AWS S3 Protocol Application Scenarios

File Browser Tools

Function Description

S3 Browser is an easy-to-use, powerful, and free Amazon S3 client for Windows. Amazon S3 itself provides a simple web service interface for storing and retrieving any amount of data at any time from anywhere. Once configured, S3 Browser lets you operate directly on files in a US3 object storage Bucket, such as uploading, downloading, and deleting them.

Installation and Use

Applicable Operating System: Windows

Installation Steps

1. Download the installation package

Download link: http://s3browser.com

2. Install the program

Go to the download page, click Download S3 Browser, and follow the prompts to install.

Usage

1. Add a user

Click on the Accounts button at the top left, and in the drop-down box, click Add new account.

In the Add new account page, the items to be filled in are described below:

Account Name: User-defined account name.

Account Type: Choose S3 Compatible Storage.

REST Endpoint: Fixed domain name, fill in according to the AWS S3 protocol instructions. For example: s3-cn-bj.example.com

Signature Version: Choose Signature V4.

Access Key ID: API public key, or Token. For information on how to obtain it, please refer to the instructions for S3’s AccessKeyID and SecretAccessKey.

Secret Access Key: API private key. For information on how to obtain it, please refer to the instructions for S3’s AccessKeyID and SecretAccessKey.

Encrypt Access Keys with a password: Leave unchecked.

Use secure transfer (SSL/TLS): At present, only the China-Beijing II, China-Hongkong, Vietnam-Ho Chi Minh, Korea-Seoul, Brazil-Sao Paulo, USA-Los Angeles, and USA-Washington regions support HTTPS. Leave this unchecked for all other regions.
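As a rough aid for the Use secure transfer setting, the region list above can be captured in a small lookup. This is only a sketch in Python: the region names are copied from the sentence above, which describes the situation "at present", and the function name endpoint_scheme is hypothetical.

```python
# Regions named above as supporting HTTPS at the time of writing.
HTTPS_REGIONS = {
    "China-Beijing II",
    "China-Hongkong",
    "Vietnam-Ho Chi Minh",
    "Korea-Seoul",
    "Brazil-Sao Paulo",
    "USA-Los Angeles",
    "USA-Washington",
}


def endpoint_scheme(region: str) -> str:
    """Return "https" if the region is in the HTTPS list, otherwise "http"."""
    return "https" if region in HTTPS_REGIONS else "http"


if __name__ == "__main__":
    for region in ("China-Hongkong", "Some-Other-Region"):
        print(region, "->", endpoint_scheme(region))
```

The list is a snapshot and would need updating whenever region support changes.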

Detailed configuration as follows:

Click Advanced S3-compatible storage settings in the lower left corner to configure the signature version and URL style.

After making the changes, click Close to close the settings window, then click Add new account to save the configuration. The account is now created.

2. Object operation

Console Function Description

- Currently, only a slice size of 8M is supported. To configure it, click Tools in the top toolbar, select Options in the drop-down list, select General, and in the pop-up page set Enable multipart uploads with size (in megabytes) to 8.

- To use a proxy, go to Tools > Options > Proxy, select Use proxy settings below, and configure the Address and Port. Disabling secure transfer (SSL/TLS) is recommended in proxy mode.

Special Notes:

1. When using third-party cloud storage services, only fixed-region configurations are supported; cross-region replication is not available.

2. S3 Browser does not support self-replication of files with the same name within the same bucket, but files can be re-uploaded for overwriting.

Common Questions

1. Uploading a file larger than 78G reports Error 400 and shows a slice size of 16MB

Problem reason:

S3 limits an upload to 10,000 slices. With a slice size of 8M, only files up to about 78G can be uploaded. For larger files, the client automatically increases the slice size to keep the number of slices under 10,000. Currently, the US3 backend for the S3 protocol only supports 8M slices, so any other slice size returns a 400 error.
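The arithmetic behind the 78G figure can be sketched as follows. The sketch assumes binary units (1M = 1024 × 1024 bytes), and the function names are illustrative only, not part of any tool.

```python
MAX_PARTS = 10_000      # limit on the number of slices per upload
MIB = 1024 * 1024
GIB = 1024 * MIB


def max_object_size(part_size_bytes: int) -> int:
    """Largest object that fits in MAX_PARTS slices of the given size."""
    return MAX_PARTS * part_size_bytes


def min_part_size(file_size_bytes: int) -> int:
    """Smallest slice size in bytes that keeps the slice count within MAX_PARTS."""
    return (file_size_bytes + MAX_PARTS - 1) // MAX_PARTS  # integer ceiling


if __name__ == "__main__":
    limit = max_object_size(8 * MIB)
    print(f"8M slices cover up to {limit / GIB:.3f} GiB")  # 78.125
    needed = min_part_size(100 * GIB)
    print(f"A 100 GiB file needs slices larger than 8M: {needed > 8 * MIB}")
```

A client that rounds the required slice size up to the next power of two would choose 16MB for such a file, which is consistent with the error described above, although the exact rounding the client applies is an assumption here.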

Solutions:

- Use the us3cli tool for uploads (us3 protocol).

2. Errors when updating the Read Permissions and Write Permissions of a file

Problem reason:

Currently, file-level permission updates in US3 only support Full Control, Read, and Write, and bucket-level permission updates are limited to the same three. Updating Read Permissions or Write Permissions is not supported and triggers an error.

Solutions:

- Use the Make public and Make private buttons in the Permissions section at the bottom of the interface to toggle between public and private space permissions.

Network File System S3FS

Functional Description

The s3fs tool mounts a Bucket locally so that objects in object storage can be operated on directly, as if they were in a local file system.

Installation and Usage

Applicable Operating System: Linux, MacOS

Applicable s3fs version: v1.83 and later

Installation Steps

MacOS environment

brew cask install osxfuse

brew install s3fs

RHEL and CentOS 7 or later versions through EPEL:

sudo yum install epel-release

sudo yum install s3fs-fuse

Debian 9 and Ubuntu 16.04 or later versions

sudo apt-get install s3fs

CentOS 6 and earlier versions

s3fs must be compiled and installed from source.

Get Source Code

First, download the source code to a specified directory, here /data/s3fs:

cd /data

mkdir s3fs

cd s3fs

wget https://github.com/s3fs-fuse/s3fs-fuse/archive/v1.83.zip

Install Dependencies

Install dependent software on CentOS:

sudo yum install automake gcc-c++ git libcurl-devel libxml2-devel fuse-devel make openssl-devel fuse unzip

Compile and Install s3fs

Go to the installation directory and run the following commands to compile and install:

1. cd /data/s3fs

2. unzip v1.83.zip

3. cd s3fs-fuse-1.83/

4. ./autogen.sh

5. ./configure

6. make

7. sudo make install

8. s3fs --version #Check s3fs version number

You can see the version number of s3fs. At this point, s3fs has been installed successfully.

Note:

During the fifth step, ./configure, you may encounter the following.

Error: configure: error: Package requirements (fuse >= 2.8.4 libcurl >= 7.0 libxml-2.0 >= 2.6 ) were not met:

Solution: The fuse version is too low. In this case, you need to manually install fuse 2.8.4 or later. The installation command example is as follows:

yum -y remove fuse-devel #Uninstall the current version of fuse

wget https://.com/libfuse/libfuse/releases/download/fuse_2_9_4/fuse-2.9.4.tar.gz

tar -zxvf fuse-2.9.4.tar.gz

cd fuse-2.9.4

./configure

make

make install

export PKG_CONFIG_PATH=/usr/lib/pkgconfig:/usr/lib64/pkgconfig/:/usr/local/lib/pkgconfig

modprobe fuse #Mount fuse kernel module

echo "/usr/local/lib" >> /etc/ld.so.conf

ldconfig #Update dynamic link library

pkg-config --modversion fuse #Check fuse version number; "2.9.4" means fuse 2.9.4 has been installed successfully

s3fs usage

Configure Key File

Create a .passwd-s3fs file in the ${HOME}/ directory. The file contains a single line in the format API Public Key:API Private Key.

For detailed information on how to get the public and private keys, please refer to S3’s AccessKeyID and SecretAccessKey instructions.
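A minimal sketch of creating the key file from a script, assuming Python 3 on Linux or macOS. The function name write_passwd_file is hypothetical, and the keys passed to it are whatever public and private keys you obtained; the file is created with owner-only read and write permissions (mode 600).

```python
import os
import stat


def write_passwd_file(path: str, public_key: str, private_key: str) -> None:
    """Write 'public:private' to path, readable and writable by the owner only."""
    flags = os.O_WRONLY | os.O_CREAT | os.O_TRUNC
    fd = os.open(path, flags, 0o600)
    with os.fdopen(fd, "w") as handle:
        handle.write(f"{public_key}:{private_key}\n")
    # Enforce mode 600 even if the file already existed with wider permissions.
    os.chmod(path, stat.S_IRUSR | stat.S_IWUSR)
```

It could be called as write_passwd_file(os.path.expanduser("~/.passwd-s3fs"), public_key, private_key).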

For example:

[root@10-9-42-233 s3fs-fuse-1.83]# cat ~/.passwd-s3fs

AKdDhQD4Nfyrr9nGPJ+d0iFmJGwQlgBTwxxxxxxxxxxxx:7+LPnkPdPWhX2AJ+p/B1XVFi8bbbbbbbbbbbbbbbbb

Set the file to owner-only read and write permissions.

chmod 600 ${HOME}/.passwd-s3fs

Perform Mount Operation

Command instruction explanation:

- Create the local path on which US3 will be mounted: ${LocalMountPath}

- Get the name of the created storage space (Bucket): ${UFileBucketName}

Note: The space name does not include the domain name suffix. For example, if the US3 space name is displayed as test.cn-bj.example.com, then ${UFileBucketName}=test

- Choose the S3 endpoint URL depending on the region of the US3 storage space and whether the local server is in the XXXCloud intranet; see Support AWS S3 Protocol Instructions

- Execute the command.

The parameters are as follows:

s3fs ${UFileBucketName} ${LocalMountPath}

-o url=${UFileS3URL} -o passwd_file=~/.passwd-s3fs

-o dbglevel=info

-o curldbg,use_path_request_style,allow_other

-o retries=1 //Error retry times

-o multipart_size="8" //The size of multipart upload is 8MB, currently only this value is supported

-o multireq_max="8" //When the uploaded file is larger than 8MB, multipart upload is adopted; currently UFile's S3 access layer does not allow a single PUT of a file over 8MB, so it is recommended to set this value

-f //Run in the foreground; omit to run in the background

-o parallel_count="32" //Parallel operation count, which can improve multipart concurrency; it is recommended not to exceed 128

Example:

s3fs s3fs-test /data/vs3fs -o url=http://internal.s3-cn-bj.example.com -o passwd_file=~/.passwd-s3fs -o dbglevel=info -o curldbg,use_path_request_style,allow_other,nomixupload -o retries=1 -o multipart_size="8" -o multireq_max="8" -o parallel_count="32"

Mount Effect

Run the df -h command to confirm that the s3fs mount is present. The effect is as follows:

At this point, the files under the directory /data/vs3fs are consistent with the files in the specified bucket. You can also use the tree command to view the file structure. Installation command: yum install -y tree. The effect is as follows:

File Upload and Download

After mounting US3 storage, you can use US3 storage as if it was a local folder.

- Copying files to ${LocalMountPath} uploads them.

- Copying files from ${LocalMountPath} to other paths downloads them.

Note:

- Paths that do not conform to the Linux file path specification are visible in the US3 Management Console but are not displayed under the Fuse-mounted ${LocalMountPath}.

- Enumerating the file list through Fuse is relatively slow. It is recommended to use commands that operate on a specific file directly, such as vim, cp, and rm.
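A minimal sketch of the two copy directions in Python, addressing each file by its full path rather than listing the mounted directory. The function names and the require_mount flag are illustrative assumptions. The os.path.ismount check guards against copying into a directory that is not currently mounted, where the file would only land on the local disk.

```python
import os
import shutil


def _check_mount(mount_path: str, require_mount: bool) -> None:
    """Raise if a mount is required but mount_path is not a mount point."""
    if require_mount and not os.path.ismount(mount_path):
        raise RuntimeError(f"{mount_path} is not a mount point; is it mounted?")


def upload(local_file: str, mount_path: str, require_mount: bool = True) -> str:
    """Copy a local file into the mounted bucket and return the destination path."""
    _check_mount(mount_path, require_mount)
    dest = os.path.join(mount_path, os.path.basename(local_file))
    return shutil.copy(local_file, dest)


def download(name: str, mount_path: str, dest_dir: str,
             require_mount: bool = True) -> str:
    """Copy one named file from the mounted bucket into dest_dir."""
    _check_mount(mount_path, require_mount)
    dest = os.path.join(dest_dir, os.path.basename(name))
    return shutil.copy(os.path.join(mount_path, name), dest)
```

For example, upload("./test.txt", "/data/vs3fs") would copy test.txt into the mount used in the example above, and download("test.txt", "/data/vs3fs", "/tmp") would copy it back out.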

Delete Files

Deleting files from ${LocalMountPath} will also delete the file in the US3 storage space.

Unmounting US3 Storage Space

sudo umount ${LocalMountPath}

Performance Data

Write throughput is about 40 MB/s, and read throughput can reach 166 MB/s (depending on concurrency).

goofys

Functional Description

Like s3fs, the goofys tool mounts a Bucket locally so that objects in object storage can be operated on directly, as if they were in a local file system. It offers better performance than s3fs.

Installation & Usage

Applicable Operating System: Linux, MacOS

Usage Steps

Download the executable:

Use the following command to extract to the specified directory:

tar -xzvf goofys-0.21.1.tar.gz

By default, the public and private keys of the bucket are configured in the $HOME/.aws/credentials file, in the following format:

[default]

aws_access_key_id = TOKEN_*****9206d

aws_secret_access_key = 93614*******b1dc40

Run the mount command ./goofys --endpoint your_ufile_endpoint your_bucket your_local_mount_dir, for example:

./goofys --endpoint http://internal.s3-cn-bj.example.com suning2 ./mount_test

The mount effect is as follows:

To test whether the mount was successful, you can copy a local file to the mount_test directory and check if it was uploaded to US3.

Other operations (delete, upload, get, unmount)

Similar to s3fs, refer to the above s3fs operations

Performance Data

On a 4-core, 8G UHost virtual machine, uploading files larger than 500MB reaches an average speed of up to 140MB/s.

FTP Service Based on US3

Functional Description

Object storage supports directly operating objects and directories in Bucket through the FTP protocol, including uploading files, downloading files, deleting files (accessing folders is not supported).

Installation & Usage

Applicable Operating System: Linux

Installation Steps

Build Environment

The s3fs tool is used to mount Bucket locally. For a detailed installation process, refer to the content on building a network file system based on S3FS and US3.

Install Dependencies

First check whether an FTP service is installed locally by running rpm -qa | grep vsftpd. If it is not installed, run the following command to install vsftpd.

yum install -y vsftpd

Start Local FTP Service

Run the following command to start the ftp service.

service vsftpd start

S3FS Usage

Add Account

- Run the following command to create a user (for example, ‘ftptest’) and set a specific directory.

useradd ${username} -d ${SpecifiedDirectory}

(Delete user command: sudo userdel -r ${username})

- Run the following command to change the user's password.

passwd ${username}

Client Use

At this point, you can connect to this server from any external machine, input your username and password, and manage the files in the bucket.

ftp ${ftp_server_ip}

s3cmd

Functional Description

s3cmd is a free command line tool to upload, retrieve and manage data using the S3 protocol. It is most suitable for users familiar with command line programs and is widely used for batch scripts and automatic backup.

Installation & Usage

Applicable Operating System: Linux, MacOS, Windows

Installation Steps

1. Download installation package

Download from https://s3tools.org/download. Version 2.1.0 is used here as an example.

2. Unzip the installation package

tar xzvf s3cmd-2.1.0.tar.gz

3. Move the path

mv s3cmd-2.1.0 /usr/local/s3cmd

4. Create soft link

ln -s /usr/local/s3cmd/s3cmd /usr/bin/s3cmd (use sudo if permission is insufficient)

5. Run the configuration command and fill in the necessary information (this can also be skipped and the values filled in manually in the next step)

s3cmd --configure

6. Fill in the configuration

vim ~/.s3cfg

Open the current configuration, fill in the following parameters

access_key = "TOKEN public key/API public key"

secret_key = "TOKEN private key/API private key"

host_base = "s3 protocol domain name, for example: s3-cn-bj.example.com"

host_bucket = "request style, for example: %(bucket)s.s3-cn-bj.example.com"

multipart_chunk_size_mb = 8

access_key: refer to Token public key / API public key

secret_key: refer to Token private key / API private key

host_base: refer to s3 protocol domain name

host_bucket: the request style; %(bucket)s stands for the bucket name

multipart_chunk_size_mb: the slice size supported by the US3 S3 protocol is 8M, so only 8 can be filled in here
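The %(bucket)s placeholder in host_bucket uses Python's %-style mapping syntax, and s3cmd itself is a Python program. The sketch below shows how such a template expands into a per-bucket hostname. It illustrates the string formatting only, not s3cmd's internal code, and bucket1 is just an example name.

```python
# Template copied from the host_bucket example above.
HOST_BUCKET = "%(bucket)s.s3-cn-bj.example.com"


def bucket_hostname(template: str, bucket: str) -> str:
    """Expand a host_bucket-style template for one bucket name."""
    return template % {"bucket": bucket}


if __name__ == "__main__":
    print(bucket_hostname(HOST_BUCKET, "bucket1"))  # bucket1.s3-cn-bj.example.com
```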

Example configuration items

[default]

access_key = "TOKEN_xxxxxxxxx"

access_token =

add_encoding_exts =

add_headers =

bucket_location = US

check_ssl_certificate = True

check_ssl_hostname = True

connection_pooling = True

content_disposition =

content_type =

default_mime_type = binary/octet-stream

delay_updates = False

delete_after = False

delete_after_fetch = False

delete_removed = False

dry_run = False

enable_multipart = True

encrypt = False

expiry_date =

expiry_days =

expiry_prefix =

follow_symlinks = False

force = False

get_continue = False

gpg_passphrase =

guess_mime_type = True

host_base = s3-cn-bj.example.com

host_bucket = %(bucket)s.s3-cn-bj.example.com

human_readable_sizes = False

invalidate_default_index_on_cf = False

invalidate_default_index_root_on_cf = True

invalidate_on_cf = False

kms_key =

limit = -1

limitrate = 0

list_md5 = False

log_target_prefix =

long_listing = False

max_delete = -1

mime_type =

multipart_chunk_size_mb = 8

multipart_max_chunks = 10000

preserve_attrs = True

progress_meter = True

proxy_host =

proxy_port = 80

public_url_use_https = False

put_continue = False

recursive = False

recv_chunk = 65536

reduced_redundancy = False

requester_pays = False

restore_days = 1

restore_priority = Standard

secret_key = "xxxxxxxxxxxxxxxxxxx"

send_chunk = 65536

server_side_encryption = False

signature_v2 = False

signurl_use_https = False

simpledb_host = sdb.amazonaws.com

skip_existing = False

socket_timeout = 300

stats = False

stop_on_error = False

storage_class =

throttle_max = 100

upload_id =

urlencoding_mode = normal

use_http_expect = False

use_https = False

use_mime_magic = True

verbosity = WARNING

website_index = index.html

Usage Method

1. Upload file

s3cmd put test.txt s3://bucket1

2. Delete file

s3cmd del s3://bucket1/test.txt

3. Download file

s3cmd get s3://bucket1/test.txt

4. Copy file

s3cmd cp s3://bucket1/test.txt s3://bucket2/test.txt

Other common operations

1. Upload directory

s3cmd put -r ./dir s3://bucket1/dir1

2. Download directory

s3cmd get -r s3://bucket1/dir1 ./dir1

3. Delete directory

s3cmd del -r s3://bucket1/dir1

4. Get bucket list

s3cmd ls

5. Get file list

s3cmd ls s3://bucket1

6. Retrieval of archived file

s3cmd restore s3://bucket1

rclone

Functional Description

rclone is a command line program for managing files on cloud storage, supporting the S3 protocol.

Installation & Usage

Installation Steps

curl https://rclone.org/install.sh | sudo bash

//refer to https://rclone.org/install/

Configuration

rclone config

Configuration Reference

[s3] //This can be filled in with s3 and other custom names as command prefixes

type = s3

provider = Other

env_auth = false

access_key_id = xxxxxxxx

secret_access_key = xxxxxxxxxxx

endpoint = http://s3-cn-bj.example.com //refer to

location_constraint = cn-bj

acl = private

bucket_acl = private // public/private

chunk_size = 8M //us3 supported s3 protocol slice size is 8M, so can only fill in 8M here

access_key_id: refer to Token public key / API public key

secret_access_key: refer to Token private key / API private key

endpoint: refer to s3 protocol domain name
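rclone stores its remotes in an INI-style file, so a section like the one above can also be generated from a script. The following is a minimal sketch using Python's configparser with placeholder credentials; the function name render_rclone_remote is hypothetical, and the values mirror the reference configuration above.

```python
import configparser
import io


def render_rclone_remote(name: str, access_key_id: str, secret_access_key: str,
                         endpoint: str, region: str) -> str:
    """Return an INI section describing an S3-compatible rclone remote."""
    parser = configparser.ConfigParser(interpolation=None)
    parser[name] = {
        "type": "s3",
        "provider": "Other",
        "env_auth": "false",
        "access_key_id": access_key_id,
        "secret_access_key": secret_access_key,
        "endpoint": endpoint,
        "location_constraint": region,
        "acl": "private",
        "bucket_acl": "private",
        "chunk_size": "8M",  # the text above states that only 8M slices are supported
    }
    buffer = io.StringIO()
    parser.write(buffer)
    return buffer.getvalue()


if __name__ == "__main__":
    print(render_rclone_remote("s3", "xxxxxxxx", "xxxxxxxxxxx",
                               "http://s3-cn-bj.example.com", "cn-bj"))
```

The printed section can be pasted into the file whose location is reported by rclone config file.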

Usage Method

Note: the following commands use ‘remote’ as an example prefix; replace it with the name of the remote defined in your configuration (for example, s3)

1. View all buckets

rclone lsd remote:

2. Get file list

rclone ls remote:bucket

3. Upload file

rclone copy ./test.txt remote:bucket

4. Delete file

rclone delete remote:bucket/test.txt

Cyberduck

Function Description

Cyberduck is a cross-platform file manager with S3 support. The configuration below applies to macOS.

Installation and use

Installation Steps

Download: https://cyberduck.io/download/

Open the software to use

Configuration

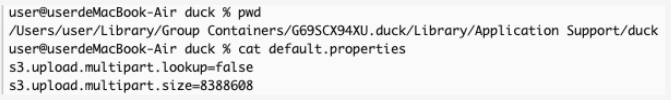

The Cyberduck configuration directory is:

~/Library/Group Containers/G69SCX94XU.duck/Library/Application Support/duck/

Create a file named “default.properties” in the configuration directory.

Configuration Reference

The contents of the file are:

s3.upload.multipart.lookup=false

s3.upload.multipart.size=8388608

The finished result is shown below

Supported Operations Description

Supports upload, download, delete, list, sync, pre-signed URL, etc.

Does not support move, copy, rename, create encrypted libraries, restore, archive, etc.