GPU Plugins

1. Introduction

UK8S implements GPU sharing using the open-source component HAMi, including:

- Video memory partitioning

- Computing power partitioning

- Error isolation

2. Deployment

⚠️ Check that the system meets the following requirements before installation:

- NVIDIA drivers: Version ≥ 440

- Kubernetes version: ≥ 1.16

- glibc: Version ≥ 2.17 and < 2.3.1

2.1 Label GPU nodes to be scheduled by HAMi:

kubectl label nodes xxx.xxx.xxx.xxx gpu=on

2.2 Helm installation

⚠️ Helm version requirement: ≥ 3.0. Check the Helm version after installation:

helm version

2.3 Obtain the chart

Download and extract the chart package:

wget https://docs.ucloud.cn/uk8s/yaml/gpu-share/hami.tar.gz

tar -xzf hami.tar.gz

rm hami.tar.gz

2.4 Install HAMi

helm install hami ./hami -n kube-system

Check the installation result:

kubectl get po -A

When the installation is successful, the output shows the pod status:

hami-device-plugin-l4jj4 2/2 Running 0 45s

hami-scheduler-59c7f4b6ff-7g565 2/2 Running 0 3m54s
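The check above can also be scripted. The following is a minimal sketch, assuming the listing text comes from kubectl get po -A; the function name hami_pods_ready is hypothetical and not part of HAMi or UK8S.

```python
def hami_pods_ready(listing):
    """Return True if every hami-* pod in the listing is Running and fully ready."""
    found = False
    for line in listing.splitlines():
        fields = line.split()
        # Locate the pod name; with `-A`, a namespace column precedes it.
        idx = next((i for i, f in enumerate(fields) if f.startswith("hami-")), None)
        if idx is None or len(fields) < idx + 3:
            continue
        found = True
        ready, status = fields[idx + 1], fields[idx + 2]
        current, _, total = ready.partition("/")
        if status != "Running" or current != total:
            return False
    # An empty listing (no hami-* pods at all) counts as not ready.
    return found


# Sample taken from the expected output shown above.
sample = """\
hami-device-plugin-l4jj4 2/2 Running 0 45s
hami-scheduler-59c7f4b6ff-7g565 2/2 Running 0 3m54s
"""
print(hami_pods_ready(sample))
```

The helper accepts lines with or without a leading namespace column, so it works on the abbreviated output shown here as well as on the full -A listing.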

2.5 Usage

Create a Pod from a YAML file. In resources.limits, in addition to the usual nvidia.com/gpu, set nvidia.com/gpumem and nvidia.com/gpucores to specify the video memory size and the share of GPU computing power. The supported resource names are:

- nvidia.com/gpu: Number of vGPUs requested (e.g., 1).

- nvidia.com/gpumem: Requested video memory size per vGPU, as an integer number of MiB (e.g., 3000).

- nvidia.com/gpumem-percentage: Video memory request percentage (e.g., 50 for 50% of video memory).

- nvidia.com/gpucores: Computing power percentage of each vGPU relative to the actual GPU, as an integer (e.g., 30 for 30%).

- nvidia.com/priority: Priority level (0 for high, 1 for low; default is 1).

High-priority tasks: When a high-priority task shares a GPU node only with other high-priority tasks, its utilization is not limited by resourceCores (the nvidia.com/gpucores setting). In other words, when only high-priority tasks exist on a GPU node, they can use all available resources on the node.

Low-priority tasks: When a low-priority task is the only one occupying the GPU, its utilization is also not limited by resourceCores. A low-priority task can therefore use all available node resources as long as no other task shares the GPU.
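The resource names above can be assembled programmatically. The following is a minimal sketch, assuming that percentages lie between 0 and 100 and that the priority is 0 or 1 as described; the helper names hami_limits and pod_manifest are hypothetical, and the range checks are assumptions rather than HAMi's own validation.

```python
import json


def hami_limits(gpus=1, gpumem=None, gpumem_percentage=None, gpucores=None, priority=None):
    """Build a resources.limits mapping from the resource names listed above."""
    limits = {"nvidia.com/gpu": int(gpus)}
    if gpumem is not None:
        limits["nvidia.com/gpumem"] = int(gpumem)  # integer MiB
    if gpumem_percentage is not None:
        if not 0 <= gpumem_percentage <= 100:  # assumed valid range
            raise ValueError("gpumem_percentage must be between 0 and 100")
        limits["nvidia.com/gpumem-percentage"] = int(gpumem_percentage)
    if gpucores is not None:
        if not 0 <= gpucores <= 100:  # assumed valid range
            raise ValueError("gpucores must be between 0 and 100")
        limits["nvidia.com/gpucores"] = int(gpucores)
    if priority is not None:
        if priority not in (0, 1):  # 0 = high, 1 = low
            raise ValueError("priority must be 0 (high) or 1 (low)")
        limits["nvidia.com/priority"] = int(priority)
    return limits


def pod_manifest(name, image, command, limits):
    """Wrap the limits in a single-container Pod manifest."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                {
                    "name": name + "-container",
                    "image": image,
                    "command": command,
                    "resources": {"limits": limits},
                }
            ]
        },
    }


manifest = pod_manifest(
    "gpu-pod",
    "uhub.service.ucloud.cn/library/ubuntu:trusty-20160412",
    ["bash", "-c", "sleep 86400"],
    hami_limits(gpus=1, gpumem=3000, gpucores=30),
)
print(json.dumps(manifest, indent=2))
```

Because kubectl also accepts JSON manifests, the printed output can be saved to a file and applied with kubectl apply -f.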

3. Monitoring

3.1 If the monitoring center is not enabled

Enable the monitoring center.

3.2 If the monitoring center is already enabled

⚠️ If the monitoring center version is at least 1.0.5-3 and below 1.0.6, or is above 1.0.6, the following deployment files are installed by default, so skip the deployment in 3.2.1.

3.2.1 Deploy Dcgm-Exporter

kubectl get po -A

3.2.2 Add monitoring targets in UK8S

In the UK8S monitoring center, open the monitoring targets page and add the targets as shown in the diagram.

3.3 Grafana monitoring

After adding monitoring targets in UK8S and logging into Grafana:

- Download the JSON file.

- Select the ’+’ icon in the left navigation bar.

- Click Import.

- Paste the downloaded JSON content into the second input box.

- Click Load.

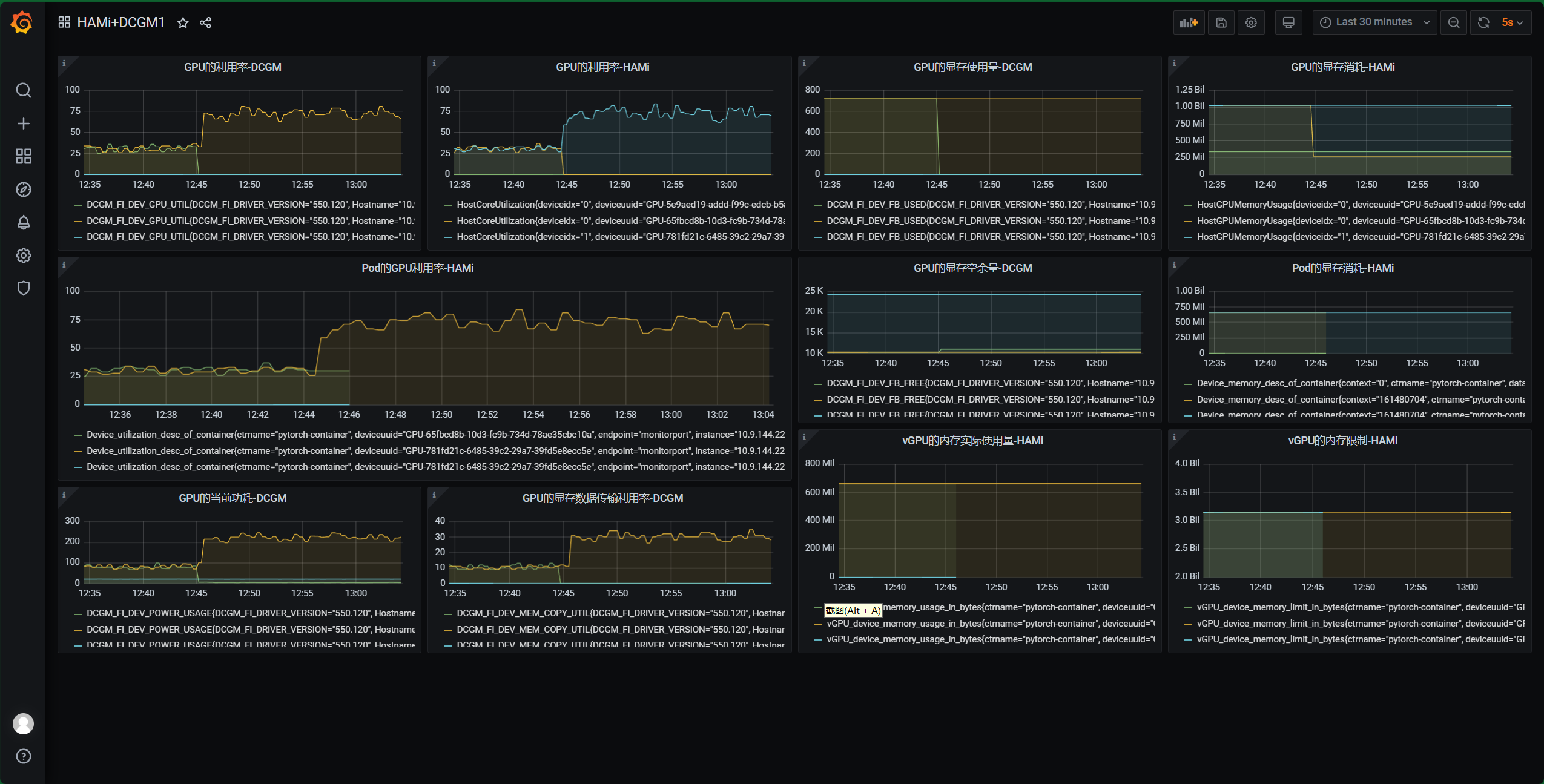

The following is a schematic diagram of Grafana monitoring for HAMi:

3.4 Monitoring indicators

In addition to the indicators from the DCGM plugin (specifically described in the GPU monitoring documentation), HAMi also supports the following indicators:

- Device_memory_desc_of_container: Real-time device memory usage in the container, used to monitor the memory consumption of devices (e.g., GPU) for each container.

- Device_utilization_desc_of_container: Real-time device utilization rate of the container, used to monitor the usage of internal devices (e.g., GPU workload).

- HostCoreUtilization: Real-time GPU core utilization rate on the host, used to monitor how heavily each physical GPU is loaded by the containers and tasks sharing it.

- HostGPUMemoryUsage: Real-time memory usage of GPU devices on the host, used to monitor memory consumption by containers or tasks using GPUs on the host.

- vGPU_device_memory_limit_in_bytes: Memory limit for a container’s vGPU (virtual GPU) device, in bytes. This is the maximum GPU memory the container can use.

- vGPU_device_memory_usage_in_bytes: Actual memory usage of a container’s vGPU device, in bytes, used to monitor vGPU memory consumption of the container.
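The two vGPU memory indicators can be combined into a usage ratio per container. The following is a minimal sketch that parses Prometheus text-format samples; the label names podname and ctrname and the sample values are assumptions for illustration, so check the labels your HAMi exporter actually emits.

```python
import re

# One Prometheus text-format sample: name{labels} value
SAMPLE_RE = re.compile(
    r'^(?P<name>[A-Za-z_:][A-Za-z0-9_:]*)\{(?P<labels>[^}]*)\}\s+(?P<value>\S+)'
)
LABEL_RE = re.compile(r'(\w+)="([^"]*)"')


def memory_usage_ratio(metrics_text):
    """Map (pod, container) to vGPU memory usage divided by its limit."""
    usage, limit = {}, {}
    for raw in metrics_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        match = SAMPLE_RE.match(line)
        if not match:
            continue
        labels = dict(LABEL_RE.findall(match.group("labels")))
        # Label names are hypothetical; adjust to the exporter's real labels.
        key = (labels.get("podname", ""), labels.get("ctrname", ""))
        value = float(match.group("value"))
        if match.group("name") == "vGPU_device_memory_usage_in_bytes":
            usage[key] = value
        elif match.group("name") == "vGPU_device_memory_limit_in_bytes":
            limit[key] = value
    # Only report containers that have both a usage sample and a non-zero limit.
    return {key: usage[key] / limit[key] for key in usage if limit.get(key)}


# Hypothetical samples: a 3000 MiB limit with half of it in use.
sample_metrics = """\
# HELP vGPU_device_memory_limit_in_bytes vGPU device limit
vGPU_device_memory_limit_in_bytes{podname="gpu-pod",ctrname="ubuntu-container"} 3145728000
vGPU_device_memory_usage_in_bytes{podname="gpu-pod",ctrname="ubuntu-container"} 1572864000
"""
print(memory_usage_ratio(sample_metrics))
```

A ratio close to 1.0 indicates that a container is approaching its vGPU memory limit.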

4. Testing

4.1 Node GPU Resource Verification

The test environment has 1 physical GPU. Because HAMi's default configuration sets the expansion ratio to 10x, the node should report 1 * 10 = 10 nvidia.com/gpu resources.

Verification Command

Execute the following command to get the Node’s GPU resource quantity:

kubectl get node xxx -oyaml | grep capacity -A 8

Sample Output:

capacity:
  cpu: "16"
  ephemeral-storage: 102687672Ki
  hugepages-1Gi: "0"
  hugepages-2Mi: "0"
  memory: 32689308Ki
  nvidia.com/gpu: "10"
  pods: "110"
  ucloud.cn/uni: "16"
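The same verification can be automated. The following is a minimal sketch, assuming the node description is obtained as JSON (for example with kubectl get node xxx -o json) and that the default expansion ratio of 10 is in effect; the function names are hypothetical.

```python
import json


def expected_vgpus(physical_gpus, split_ratio=10):
    """vGPUs the node should advertise: physical GPUs times the expansion ratio."""
    return physical_gpus * split_ratio


def advertised_vgpus(node_json):
    """Read nvidia.com/gpu from status.capacity of a node description in JSON."""
    node = json.loads(node_json)
    return int(node["status"]["capacity"].get("nvidia.com/gpu", "0"))


# Trimmed sample mirroring the capacity block shown above.
sample_node = json.dumps(
    {"status": {"capacity": {"cpu": "16", "nvidia.com/gpu": "10", "pods": "110"}}}
)
print(advertised_vgpus(sample_node) == expected_vgpus(1))
```

If the two numbers differ, either the expansion ratio was changed from its default or the device plugin has not registered the GPU yet.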

4.2 GPU Memory Test

The configuration of gpu-mem-test.yaml is as follows:

apiVersion: v1
kind: Pod
metadata:
  name: gpu-pod
spec:
  containers:
  - name: ubuntu-container
    image: uhub.service.ucloud.cn/library/ubuntu:trusty-20160412
    command: ["bash", "-c", "sleep 86400"]
    resources:
      limits:
        nvidia.com/gpu: 1 # Request 1 vGPU
        nvidia.com/gpumem: 3000 # Allocate 3000MiB memory per vGPU (optional, integer)
        nvidia.com/gpucores: 30 # Allocate 30% computing power per vGPU (optional, integer)

Create the Pod using this configuration. It should start normally. Verification steps:

kubectl get po

Expected output:

NAME READY STATUS RESTARTS AGE

gpu-pod 1/1 Running 0 48s

Enter the Pod and run nvidia-smi to view the GPU information, then verify that the reported video memory limit is 3000 MiB, as requested in resources.limits.
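The memory check can be scripted as well. The following is a minimal sketch, assuming the text was captured from nvidia-smi --query-gpu=memory.total --format=csv,noheader,nounits run inside the Pod, which prints one MiB value per visible GPU; the function names are hypothetical, and the exact-match comparison is an assumption.

```python
def reported_memory_mib(nvidia_smi_csv):
    """Parse one total-memory value (MiB) per line of nvidia-smi CSV output."""
    return [int(line.strip()) for line in nvidia_smi_csv.splitlines() if line.strip()]


def matches_limit(nvidia_smi_csv, requested_mib):
    """True if every visible GPU reports exactly the requested memory limit."""
    values = reported_memory_mib(nvidia_smi_csv)
    return bool(values) and all(value == requested_mib for value in values)


# Hypothetical captured output for a Pod that requested nvidia.com/gpumem: 3000.
captured = "3000\n"
print(matches_limit(captured, 3000))
```

If the Pod reports the full physical memory instead of the requested value, the limit was not applied to that container.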

4.3 Multiple Pods Single GPU Utilization Test

Test Command

First, create hami-npod-1gpu.yml with the following content. Replace xxx.xxx.xxx.xxx with the IP of the target GPU node.

The Deployment creates n Pods, where n is the replicas value in the YAML. Delete them manually after testing.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: hami-npod-1gpu
spec:
  replicas: 3 # Create three identical Pods (adjust as needed)
  selector:
    matchLabels:
      app: pytorch
  template:
    metadata:
      labels:
        app: pytorch
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/hostname
                operator: In
                values:
                - xxx.xxx.xxx.xxx # Replace with actual GPU node IP
      containers:
      - name: pytorch-container
        image: uhub.service.ucloud.cn/gpu-share/gpu_pytorch_test:latest
        command: ["/bin/sh", "-c"]
        args: ["cd /app/pytorch_code && python3 2.py"]
        resources:
          limits:
            nvidia.com/gpu: 1
            nvidia.com/gpumem: 3000
            nvidia.com/gpucores: 25

Test Results

Monitoring shows that the long-term average computing power consumption of the three Pods aligns with the configured limits, though short-term fluctuations may occur.
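One way to quantify this observation is to compare the mean utilization of each Pod against its configured nvidia.com/gpucores value while also recording the peak. The following is a minimal sketch; the sample values and the 5-point tolerance are hypothetical and are not measurements from this test.

```python
from statistics import mean


def summarize_utilization(samples, limit_percent, tolerance=5.0):
    """Return (average, peak, ok), where ok means the average stays near the limit."""
    average = mean(samples)
    peak = max(samples)
    # Short-term peaks may exceed the limit; only the long-term average is judged.
    return average, peak, average <= limit_percent + tolerance


# Hypothetical utilization samples (percent) for one Pod limited to 25%.
pod_samples = [22, 31, 24, 27, 20]
average, peak, ok = summarize_utilization(pod_samples, limit_percent=25)
print(average, peak, ok)
```

In this illustration the peak exceeds the configured 25% while the average remains within the tolerance, which matches the pattern described above.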