kube-proxy Mode Selection

kube-proxy is a critical Kubernetes component that load-balances traffic between Services and their backend Pods (Endpoints). It supports three operating modes, each built on a different mechanism: userspace, iptables, and IPVS.

The userspace mode is deprecated because of its poor performance, so this section compares the iptables and IPVS modes and explains how to choose between them.

How to choose

- For large-scale clusters, especially when the number of Services may exceed 1000, IPVS is recommended (see the test data below for details).
- For medium-scale clusters with relatively few Services, iptables is recommended.
- If clients open a large number of concurrent short-lived connections, iptables is currently recommended; see the note below for the reason.
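The rules of thumb above can be condensed into a small decision function. This is only a sketch: `ClusterProfile` and `recommend_proxy_mode` are hypothetical names, the 1000-Service threshold comes from the guidance above, and giving the short-connection caveat priority over cluster size is an assumption. The returned strings match kube-proxy's `--proxy-mode` values.

```python
from dataclasses import dataclass


@dataclass
class ClusterProfile:
    """Hypothetical summary of a cluster, used only for this illustration."""
    service_count: int
    many_short_connections: bool  # clients open many concurrent short-lived connections


# Threshold taken from the guidance above ("may exceed 1000" Services).
IPVS_SERVICE_THRESHOLD = 1000


def recommend_proxy_mode(profile: ClusterProfile) -> str:
    # Assumption: the short-connection caveat overrides the scale rule,
    # because IPVS connection reuse can route requests to deleted Pods.
    if profile.many_short_connections:
        return "iptables"
    if profile.service_count > IPVS_SERVICE_THRESHOLD:
        return "ipvs"
    return "iptables"


print(recommend_proxy_mode(ClusterProfile(service_count=4000, many_short_connections=False)))  # ipvs
```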

Note: In IPVS mode, during a rolling update of the cluster, if a client opens a large number of short connections within a short period (about two minutes), client source ports are reused. The node then receives requests carrying the same network five-tuple as an earlier connection, which causes IPVS to reuse the existing connection entry. As a result, packets may be forwarded to a Pod that has already been destroyed, causing service errors.

Upstream issue: https://github.com/kubernetes/kubernetes/issues/81775
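The connection-reuse problem described in the note can be illustrated with a toy model. `ToyConnTable` is a hypothetical, heavily simplified stand-in for a connection table keyed by five-tuple; it has none of IPVS's real timeouts or TCP state tracking and only shows the "look up the existing entry before scheduling" behaviour. Round-robin scheduling is used because it is kube-proxy's default IPVS scheduler (`rr`).

```python
from dataclasses import dataclass, field

# (protocol, source IP, source port, destination IP, destination port)
FiveTuple = tuple[str, str, int, str, int]


@dataclass
class ToyConnTable:
    """Hypothetical, much-simplified model of a five-tuple connection table."""
    backends: list[str]
    conns: dict[FiveTuple, str] = field(default_factory=dict)
    next_index: int = 0

    def schedule(self) -> str:
        """Round-robin over the current backend list."""
        backend = self.backends[self.next_index % len(self.backends)]
        self.next_index += 1
        return backend

    def forward(self, key: FiveTuple) -> str:
        # An existing entry for the same five-tuple is reused as-is,
        # even if its backend is no longer in the backend list.
        if key in self.conns:
            return self.conns[key]
        backend = self.schedule()
        self.conns[key] = backend
        return backend


table = ToyConnTable(backends=["pod-a", "pod-b"])
key: FiveTuple = ("tcp", "10.0.0.5", 40000, "10.96.0.10", 80)
print(table.forward(key))  # pod-a

# Rolling update: pod-a is deleted and pod-c replaces it,
# but the entry for the old connection has not expired yet.
table.backends = ["pod-c", "pod-b"]

# The client reuses source port 40000, producing the same five-tuple.
print(table.forward(key))  # pod-a (a Pod that no longer exists)
```

A new source port would be scheduled normally onto a live Pod; only the reused five-tuple hits the stale entry.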

How to switch

Refer to the kube-proxy mode switching documentation.

iptables mode

iptables is a Linux kernel feature: an efficient firewall that provides packet handling and filtering. It installs chains of rules at hooks along the kernel's packet-processing pipeline. In iptables mode, kube-proxy implements NAT and load balancing with rules in the nat table, hooked at PREROUTING (and at OUTPUT for traffic that originates on the node itself). This approach is simple and effective, relies on mature kernel functionality, and coexists well with other applications that also use iptables.
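The paragraph above does not spell out how the NAT rules spread traffic across Pods. In kube-proxy's iptables mode, each Service's `KUBE-SVC-*` chain contains one jump rule per endpoint, and all but the last use the `statistic` match in `random` mode: rule i (0-based) of n fires with probability 1/(n - i). The sketch below simulates that sequential evaluation to show it yields a uniform choice; the function names and Pod names are hypothetical.

```python
import random
from collections import Counter


def statistic_probabilities(n: int) -> list[float]:
    """Probability on each of n jump rules in a KUBE-SVC-* style chain.

    Rule i (0-based) matches with probability 1 / (n - i); the last rule
    has probability 1, so a new connection always lands on some endpoint.
    """
    return [1.0 / (n - i) for i in range(n)]


def pick_endpoint(endpoints: list[str], rng: random.Random) -> str:
    """Evaluate the rules in order, as iptables does, and return the first match."""
    for endpoint, p in zip(endpoints, statistic_probabilities(len(endpoints))):
        if rng.random() < p:
            return endpoint
    raise RuntimeError("unreachable: the last rule always matches")


rng = random.Random(42)
counts = Counter(pick_endpoint(["pod-a", "pod-b", "pod-c"], rng) for _ in range(30_000))
print(statistic_probabilities(3))  # [0.3333333333333333, 0.5, 1.0]
print(counts)  # each Pod receives roughly 10,000 new connections
```

Each rule is reached with probability (n - i)/n and then fires with 1/(n - i), so every endpoint ends up with probability 1/n.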

IPVS mode

IPVS is a Linux kernel feature designed for load balancing. In IPVS mode, kube-proxy uses IPVS instead of iptables for load balancing. IPVS was built to load-balance large numbers of services: it has an optimized API and uses hash-table lookups rather than walking a list of rules, so it performs better than iptables in large-scale scenarios.
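To make the difference between walking a rule list and a hashed lookup concrete, the sketch below counts how many entries each approach examines to find the last of n Services. It is a simplified model, not a benchmark: `build_services`, `linear_lookup`, and `hashed_lookup` are hypothetical helpers, a Python `dict` stands in for IPVS's hash table, and counting one probe per hash lookup is a modeling assumption.

```python
def build_services(n: int) -> list[tuple[tuple[str, int], str]]:
    """Hypothetical Service entries: (cluster IP, port) -> Service name."""
    return [((f"10.96.{i // 256}.{i % 256}", 80), f"svc-{i}") for i in range(n)]


def linear_lookup(rules, key):
    """Walk rules in order, like one rule per Service in an iptables chain.

    Returns (target, number of rules examined).
    """
    examined = 0
    for match, target in rules:
        examined += 1
        if match == key:
            return target, examined
    return None, examined


def hashed_lookup(table, key):
    """Single hash-table probe, standing in for IPVS's hashed service lookup."""
    return table.get(key), 1


for n in (100, 1000, 4000):
    rules = build_services(n)
    table = dict(rules)
    last_key = rules[-1][0]
    print(n, linear_lookup(rules, last_key)[1], hashed_lookup(table, last_key)[1])
# 100 100 1
# 1000 1000 1
# 4000 4000 1
```

The linear cost grows with the number of Services, while the hashed cost stays flat, which matches the trend described in the comparison below.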

Mode comparison

In both iptables and IPVS mode, forwarding performance depends on the number of Services and their corresponding Endpoints, because the number of iptables rules or IPVS entries on each node grows with the number of Services and Endpoints.

The forwarding performance of IPVS and iptables differs mainly during connection setup (the TCP three-way handshake), so the gap between them is most pronounced in workloads with a large number of short-lived connections.
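A rough worked calculation shows why connection setup dominates. In iptables mode, only the first packet of a connection traverses the nat-table rules; later packets are translated via conntrack. The sketch below assumes a worst-case linear scan of one rule per Service for every new connection; `nat_rule_evaluations` is a hypothetical helper and the numbers are illustrative, not measurements.

```python
import math


def nat_rule_evaluations(requests: int, services: int, requests_per_connection: int) -> int:
    """Rough, worst-case count of nat-table rule evaluations in iptables mode.

    Assumptions (illustrative only):
    - only the first packet of each connection traverses the nat rules;
      later packets are handled by conntrack;
    - each new connection scans one rule per Service (target is the last one).
    """
    connections = math.ceil(requests / requests_per_connection)
    return connections * services


short_lived = nat_rule_evaluations(10_000, 1_000, requests_per_connection=1)
keep_alive = nat_rule_evaluations(10_000, 1_000, requests_per_connection=100)
print(short_lived, keep_alive)  # 10000000 100000
```

With one request per connection, every request pays the full scan; reusing connections amortizes it, which is why short-connection workloads expose the difference between the two modes.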

When the number of Services and Endpoints is small (dozens to hundreds of Services, hundreds to thousands of Endpoints), the forwarding performance of the iptables mode is slightly better than that of IPVS.

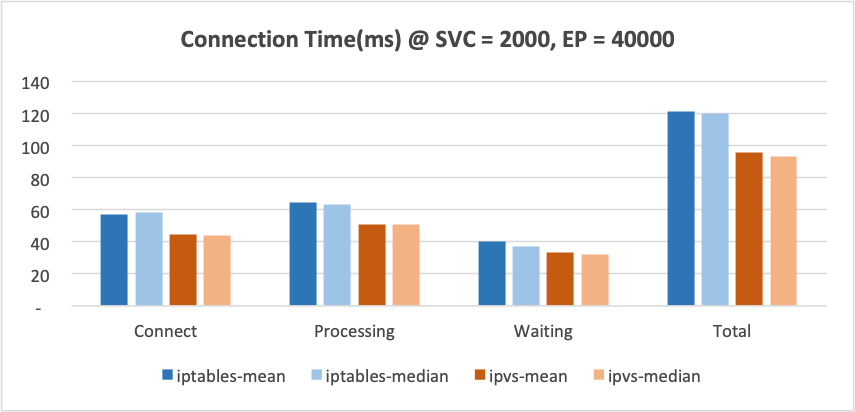

As the number of Services and Endpoints gradually increases, the forwarding performance of the iptables mode significantly decreases, while the forwarding performance of IPVS mode remains relatively stable.

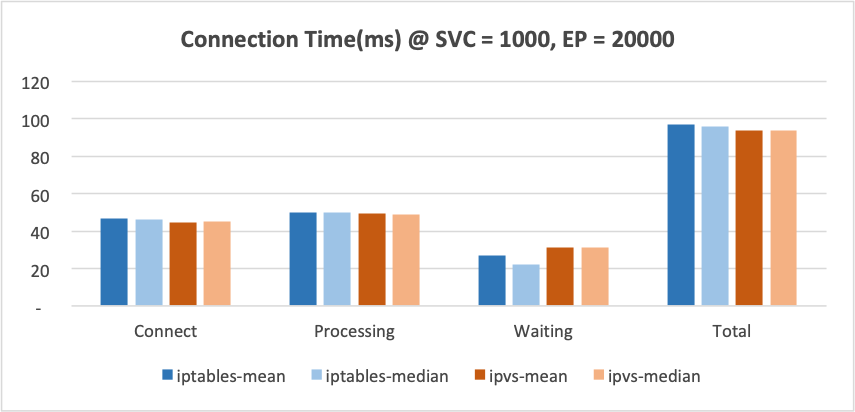

When there are about 1000 Services and about 20000 Endpoints, the forwarding performance of iptables mode falls below that of IPVS. As the numbers keep growing (thousands of Services and tens of thousands of Endpoints), IPVS performance drops only slightly, while iptables performance drops sharply.

Test cases

We use two test nodes: on N1, kube-proxy runs in iptables mode; on N2, kube-proxy runs in IPVS mode.

On both N1 and N2, run the ApacheBench client ab with 1000 concurrent connections to complete a total of 10000 short-connection requests.

Run the test on N1 and N2 separately (never at the same time) and observe the results reported by ab:

Connection Times (ms)

| | min | mean | [+/-sd] | median | max |
|---|---|---|---|---|---|
| Connect | 1 | 38 | 8.4 | 38 | 59 |
| Processing | 10 | 41 | 9.7 | 40 | 67 |
| Waiting | 1 | 28 | 9.0 | 28 | 56 |
| Total | 51 | 79 | 7.5 | 78 | 101 |

Vary the number of Services (100, 500, 1000, 2000, 3000, 4000), rerun the test at each step, and collect the results.
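Collecting results across many runs is easier with a small parser for ab's "Connection Times (ms)" block. `parse_connection_times` is a hypothetical helper, not part of ab; it assumes the standard layout of five numeric columns (min, mean, +/-sd, median, max) per row, and the sample below reuses the figures from the output above.

```python
import re

# One numeric column, e.g. "38" or "8.4".
_NUM = r"(\d+(?:\.\d+)?)"
# Matches rows such as "Connect:        1   38   8.4     38      59".
_ROW = re.compile(r"^\s*(Connect|Processing|Waiting|Total):\s+" + r"\s+".join([_NUM] * 5) + r"\s*$")
_FIELDS = ("min", "mean", "sd", "median", "max")


def parse_connection_times(ab_output: str) -> dict[str, dict[str, float]]:
    """Extract the 'Connection Times (ms)' rows from ab output."""
    rows: dict[str, dict[str, float]] = {}
    for line in ab_output.splitlines():
        m = _ROW.match(line)
        if m:
            rows[m.group(1)] = dict(zip(_FIELDS, map(float, m.groups()[1:])))
    return rows


sample = """\
Connection Times (ms)
              min  mean[+/-sd] median   max
Connect:        1   38   8.4     38      59
Processing:    10   41   9.7     40      67
Waiting:        1   28   9.0     28      56
Total:         51   79   7.5     78     101
"""
times = parse_connection_times(sample)
print(times["Total"]["mean"], times["Connect"]["sd"])  # 79.0 8.4
```

Saving ab's output per node and per Service count, then parsing each file, gives one row per data point for comparing N1 and N2.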

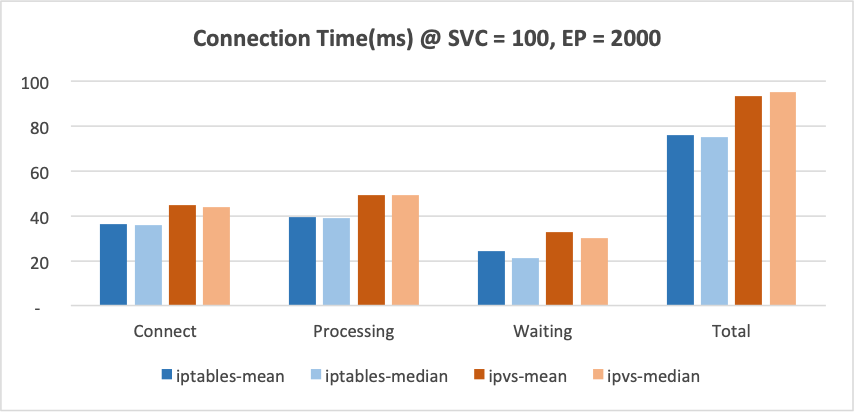

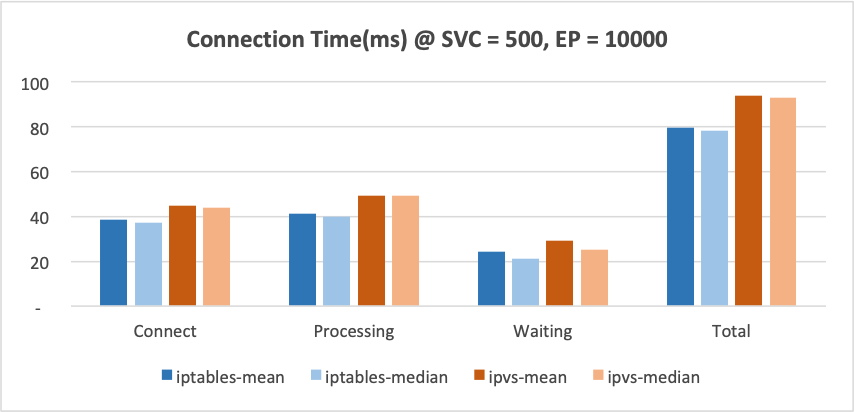

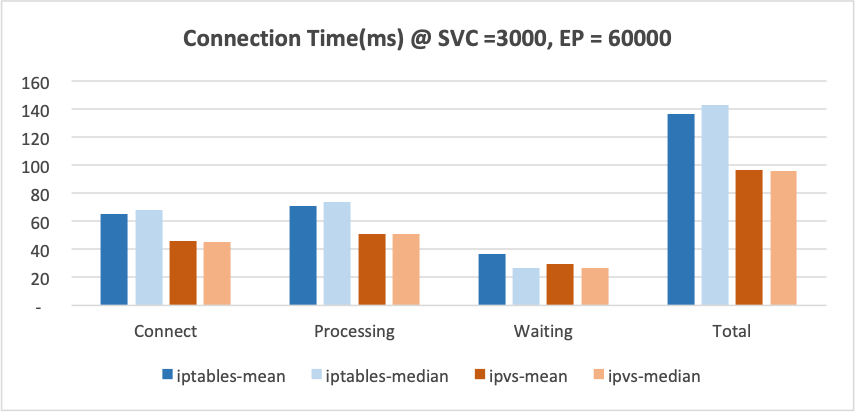

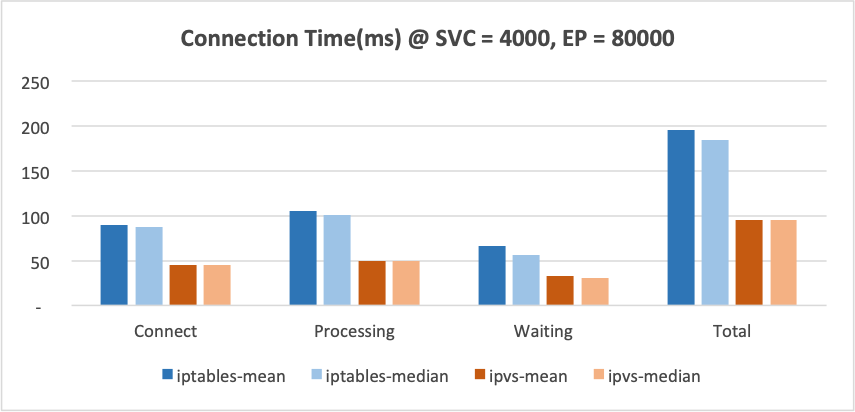

The figures above show the IPVS and iptables performance test data collected by the UK8S team.

As the data show, with 100 or 500 Services iptables forwards faster than IPVS; at 1000 Services the two are roughly equal; and as the number of Services grows further, the performance advantage of IPVS becomes increasingly clear.