Llama3-8B-Instruct-Chinese Quick Deployment

Introduction

Llama 3, developed by Meta, is trained on a 15-trillion-token dataset—7 times larger than that of Llama 2—including 4 times more code data. The pre-training dataset also contains 5% non-English data, supporting up to 30 languages in total, which gives it a greater advantage in aligning capabilities across other languages. Llama 3 Instruct is further optimized for conversational applications, trained using supervised fine-tuning (SFT), rejection sampling, proximal policy optimization (PPO), and direct policy optimization (DPO), with over 10 million human-annotated data points. The model mentioned in this article is the Chinese instruction-fine-tuned version (Llama3-8B-Instruct-Chinese), which demonstrates relatively strong performance in Chinese.

Quick Deployment

Log in to the XXXCloud console (https://console.ucloud-global.com/uhost/uhost/create ). Select “GPU Type” and “High Cost-Effective Graphics Card 6” for the machine type. Details such as the number of CPUs and GPUs can be configured as needed.

Minimum recommended configuration: 16-core CPU, 32G memory, 1 high cost-effective graphics card-6 GPU.

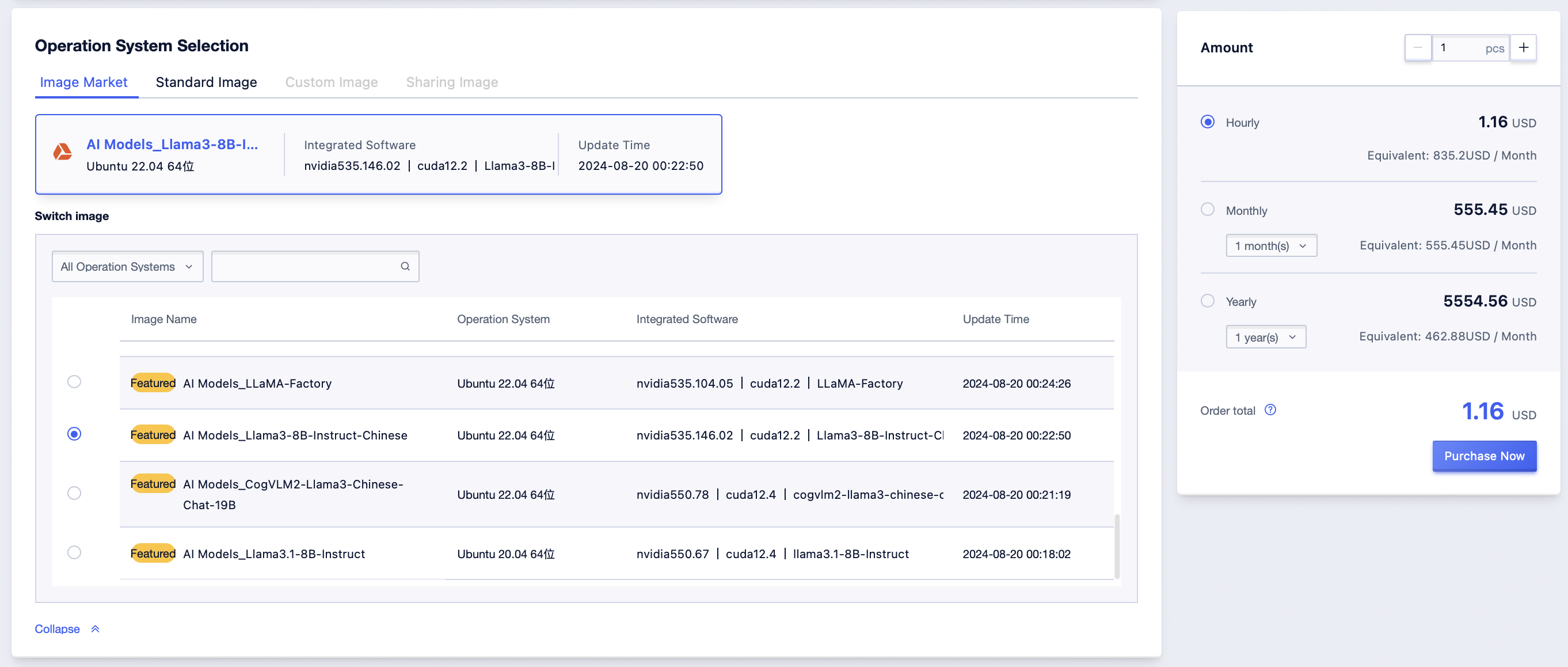

Select “Image Market” for the image, search for “Llama3” for the image name, and select this image to create a GPU UHost.

After the GPU UHost is created successfully, log in to the GPU UHost.